the Creative Commons Attribution 4.0 License.

A classification technique of civil objects by artificial neural networks using estimation of entropy on synthetic aperture radar images

Vyacheslav P. Shkodyrev

Editorial note: "group" has been changed to "civil" throughout the text. The handling associate editor and the handling chief editor approved the changes.

The article discusses a method for classifying non-moving civil objects from information received by synthetic aperture radar (SAR) on unmanned aerial vehicles (UAVs). The theoretical approach analyses civil objects by estimating cross-entropy with a naive Bayesian classifier. The entropy of target spots on SAR images varies with the altitude and aspect angle of the UAV. The paper shows that an artificial neural network can classify targets into three classes with fair accuracy. The results show an advantage over other radar recognition methods under the constant false-alarm rate criterion ($P_{\mathrm{CFAR}} < 0.01$). The reliability was confirmed by checking the initial data using principal component analysis.


1 Introduction

A trend in modern airborne radar systems for ground monitoring is the introduction of machine learning and artificial intelligence technologies (Gini, 2008). Methods for the automatic detection and recognition of objects against the underlying surface are required in terrain mapping, aerial photography, and video recording (Pillai et al., 2008; Soumekh, 1999). The physical principles of radar recognition are based on the echo signals received from radar-contrast targets, the Doppler shifts of moving objects, and changes in the polarization structure of the reflected wave (Lee and Pottier, 2009).

One promising direction is the use of unmanned aerial vehicles (UAVs) that monitor the Earth's surface with synthetic aperture radar (SAR). These radar systems deliver images in real time at different altitudes and varying aspect angles (Moreira et al., 2013; Long et al., 2019).

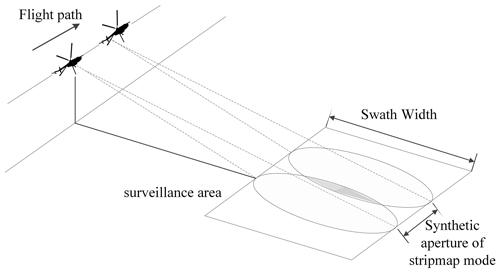

Modern SAR development includes the use of the so-called homogeneous environment and applications for MIMO systems (Moreira et al., 2013). Important characteristics of automatic target recognition (ATR) are the constant false-alarm rate (CFAR) (Zhoufeng et al., 2002; Jung et al., 2009), the type of radar polarization (Lee and Pottier, 2009), and the SAR imaging mode (stripmap or spotlight). With multilook processing, spaceborne radar can reach a linear resolution of 0.3 m (Kim et al., 2014; Novak et al., 1998) (Fig. 1).

A UAV operating in SAR mode cannot achieve such a resolution; therefore, detecting objects in the region of interest (ROI) is usually difficult.

It is advisable to use the spatial characteristics of a group of targets detected by the UAV when a radar map is available (Novak et al., 1998). Such research was presented in Kvasnov (2019), where spatial features from the Terra-SAT satellite were used to detect an area containing a set of objects. A further development is the recognition of ground groups in a given area. Such a group can consist of infrastructure elements in civilian applications (town blocks, agricultural fields, sea docks, etc.) (Yin et al., 2007; Moulton et al., 2008). Vehicles (cars in a car park, planes on an airstrip) can also be arranged in a regular order (Halversen et al., 1994; Owirka et al., 1995). At the same time, as a condition for civil object recognition, the ROI must have a reference point while the UAV monitors the terrain (Labowski et al., 2016). The reference point should be a previously identified object (for example, a road, forest, or building) (Fig. 2) (Huimin and Baoshu, 2007).

Following the concept of high-level classification (El-Darymli et al., 2016), we consider the feature-based approach, which can be implemented as a single multi-class classifier. Several mathematical models are available for SAR ATR:

- Bayes classifier (Kvasnov, 2020);
- linear discriminant function (Srinivas et al., 2014; Yu et al., 2011);
- neural networks (Cho and Park, 2018; Ernisse et al., 1997).

The analysis of civil targets can be based on situational modelling with templates (Huimin and Baoshu, 2007). Therefore, to obtain a template of the dataset, we use artificial neural networks based on a multilayer perceptron (El-Darymli et al., 2016; Ernisse et al., 1997). Most of these papers do not take into account the speckle of the image, which can vary with UAV altitude (Ullmann et al., 2018).

The purpose of the article is to consider an ATC method for non-moving civil objects based on their spatial characteristics in SAR mode. The technique focuses on analysing the ROI where substantial fluctuations of entropy exist; once the features of the image have been estimated, an artificial neural network is constructed to recognize the object group. The training data cover different resolutions. The example SAR images (Figs. 2, 3, and 4) are taken from https://www.sandia.gov (last access: 5 July 2021).

2 Theoretical approach to tasks of radar recognition

Suppose there is a finite number of group objects (classes) that must be classified. A set of observations (features) $X_m$ is given, which corresponds to known classes $Y_n$. There is an unknown transformation $X_m \to Y_n$ defined on a training sample of finite size. It is required to construct an algorithm $F$ for the initial data that minimizes the loss function at the output (Wang et al., 2015):

The object classification algorithm is constructed using the gradient descent method. We then define the cross-entropy as the loss function:

where $H(p)$ and $D_{\mathrm{KL}}(p \| q)$ are the entropy and the relative entropy (Kullback–Leibler divergence) over the probability distributions $p(y)$ and $q(y)$, respectively; $p(y)$ is the model distribution given by the binary class indicator; $q(y)$ is the predicted probability distribution.
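The symbols defined above are related by the standard identity $H(p, q) = H(p) + D_{\mathrm{KL}}(p \| q)$; the notation $H(p, q)$ for the cross-entropy is an assumption here. The following minimal sketch checks this identity numerically. The two distributions are hypothetical values chosen only for illustration.

```python
import math

def entropy(p):
    """Shannon entropy H(p) in nats; terms with p_i = 0 contribute nothing."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def kl_divergence(p, q):
    """Relative entropy D_KL(p || q); needs q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(p, q) = -sum_i p_i * log(q_i)."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical distributions over three classes (illustration only).
p = [0.7, 0.2, 0.1]   # reference distribution
q = [0.5, 0.3, 0.2]   # predicted distribution

# The cross-entropy splits into the entropy of p plus the divergence from p to q.
assert math.isclose(cross_entropy(p, q), entropy(p) + kl_divergence(p, q))
```

Because $H(p)$ does not depend on the prediction, minimizing the cross-entropy over $q$ is equivalent to minimizing the Kullback–Leibler divergence.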

We consider a training sample in which the labels of the recognition objects are fixed, $Y_n = \mathrm{const}$; then $H(p) = \mathrm{const}$. After rewriting, Eq. (3) takes the form of a logistic function:

The function $CE(Y)$ tends to fit the predicted distribution to its asymptotic value, penalizing both erroneous predictions $(1 - p_i)$ and uncertain predictions $(p_i < 1)$. We use $CE(Y)$ as a measure of the difference between the real target and noise in SAR images.
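As an illustration of this penalizing behaviour, the sketch below assumes the common one-hot form of the loss, in which a fixed label reduces the cross-entropy to $-\log q_{\mathrm{true}}$. The function names and the probability vectors are hypothetical and are not taken from the article's data.

```python
import math

def one_hot_cross_entropy(true_class, predicted):
    """Cross-entropy for a fixed (one-hot) label: -log q[true_class]."""
    return -math.log(predicted[true_class])

def mean_cross_entropy(labels, predictions):
    """Average loss over a batch of (label, predicted distribution) pairs."""
    losses = [one_hot_cross_entropy(y, q) for y, q in zip(labels, predictions)]
    return sum(losses) / len(losses)

# Hypothetical predictions for a sample whose true class is 0.
confident = one_hot_cross_entropy(0, [0.98, 0.01, 0.01])  # correct and sure
uncertain = one_hot_cross_entropy(0, [0.40, 0.30, 0.30])  # correct but unsure
wrong = one_hot_cross_entropy(0, [0.05, 0.90, 0.05])      # erroneous

# The loss grows from confident to uncertain to erroneous predictions.
assert confident < uncertain < wrong
```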

To assess the classification results, we use principal component analysis (Karhunen–Loève theorem). This method assesses the independence of the features and identifies the most critical of them. Mathematically, it amounts to estimating the covariance matrix with the minimum number of elements on the main diagonal. The empirical covariance matrix can be obtained from the training sample:

The principal components are estimated on centred data $\langle x_m \rangle$, i.e. data with zero mean. The covariance matrix $C_{m \times m}$ can be represented in canonical form (spectral matrix decomposition) through its eigenvalues ($\Lambda$) and eigenvectors ($V$):

where $V$ is a matrix whose columns are the eigenvectors of $C_{m \times m}$; $\Lambda$ is a diagonal matrix with the corresponding eigenvalues on the main diagonal; $V^{-1}$ is the inverse of $V$.

The task is to find orthogonal projections with maximum scatter. The principal component vectors then form an orthonormal set consisting of the eigenvectors of the covariance matrix $C$, arranged in decreasing order of their eigenvalues. To estimate the number of principal components, we use the relative squared error for the first $k$ components:

where $\mathrm{tr}(C)$ is the trace of the covariance matrix $C$.

After projection onto the first k principal components, it is convenient to normalize the covariance matrix by unit variance. Hence, for each coordinate this value is .
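The steps above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the covariance is normalized by $N - 1$, the relative error for the first $k$ components is taken as $1 - \sum_{i \le k} \lambda_i / \mathrm{tr}(C)$, and unit variance is obtained by dividing each projected coordinate by $\sqrt{\lambda_i}$. The data are synthetic.

```python
import numpy as np

def pca(X):
    """Eigenvalues (descending), eigenvectors (columns) and the relative
    residual variance left after keeping the first k components."""
    Xc = X - X.mean(axis=0)                  # centre every feature
    C = Xc.T @ Xc / (X.shape[0] - 1)         # empirical covariance, m x m
    eigvals, eigvecs = np.linalg.eigh(C)     # C is symmetric: C = V diag(l) V^T
    order = np.argsort(eigvals)[::-1]        # sort by decreasing eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    residual = 1.0 - np.cumsum(eigvals) / np.trace(C)
    return eigvals, eigvecs, residual

def project_whitened(X, eigvals, eigvecs, k):
    """Project onto the first k components and scale them to unit variance."""
    Xc = X - X.mean(axis=0)
    return (Xc @ eigvecs[:, :k]) / np.sqrt(eigvals[:k])

# Synthetic sample: 192 observations of 4 features with unequal spread.
rng = np.random.default_rng(0)
X = rng.normal(size=(192, 4)) * np.array([5.0, 2.0, 1.0, 0.5])

eigvals, eigvecs, residual = pca(X)
Z = project_whitened(X, eigvals, eigvecs, k=2)
print(residual)   # residual[k-1] is the share of variance not captured by k components
```

For a symmetric covariance matrix the eigenvector matrix is orthogonal, so $V^{-1} = V^{\mathsf{T}}$, which is why `numpy.linalg.eigh` is used rather than a general eigen-solver.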

3 Mathematical model of recognition on SAR images

The viewing position of the UAV can substantially change the quality of the SAR images under study. When we focus on the ROI, the speckle pattern of the image has its own degree of entropy. According to the condition in Eq. (2), the cross-entropy of the target spot under study can fluctuate. We need a method that finds the best option for extracting an informative parameter from the images.

3.1 Mathematical decision

According to Eq. (1), our objective is to find an estimate of the conditional probability $p(Y \mid x_i)$. If we assume that the features $x_i \in X_m$ are independent, the solution can be found as the product form of the naive Bayesian classifier:

where $p(Y)$ is the average probability of all recognition classes $y_j \in Y_n$; $p(x_i \mid Y)$ is the likelihood function for an arbitrary feature $x_i$, given that the set of classes $Y$ is known.
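A minimal sketch of the naive Bayesian product rule, $p(Y \mid x) \propto p(Y) \prod_i p(x_i \mid Y)$, is given below. The Gaussian form of the likelihoods is an assumption made only for this illustration, and the class names, priors, and feature parameters are hypothetical.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a normal distribution (assumed likelihood model)."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def naive_bayes_posterior(x, priors, params):
    """Posterior over classes for a feature vector x.

    priors[c] is p(Y = c); params[c][i] is the (mean, std) of feature i
    for class c. Features are treated as conditionally independent.
    """
    joint = {}
    for c, prior in priors.items():
        likelihood = 1.0
        for xi, (mean, std) in zip(x, params[c]):
            likelihood *= gaussian_pdf(xi, mean, std)
        joint[c] = prior * likelihood
    total = sum(joint.values())
    return {c: value / total for c, value in joint.items()}

# Hypothetical two-feature model: (number of spots, mean spacing in pixels).
priors = {"power_lines": 0.5, "vehicles": 0.5}
params = {
    "power_lines": [(10.0, 3.0), (50.0, 10.0)],
    "vehicles": [(30.0, 5.0), (5.0, 2.0)],
}
posterior = naive_bayes_posterior((28.0, 6.0), priors, params)
print(max(posterior, key=posterior.get))
```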

If new data $CE(Y \mid x_i)$ replace the initial data $CE(Y)$, they add the expected amount of uncertainty to Eq. (3):

We consider the case $p_i = 1$; then only one additive component remains in Eq. (3). Substituting Eq. (7) into Eq. (8) and simplifying, we obtain

where is conditional entropy introduced by a feature xj provided that class yi∈Y is known.

The maximization of $CE(Y \mid x_i)$ in Eq. (9) is governed by the conditional entropy. The extreme value in Eq. (9) can be calculated by maximum likelihood estimation, which is not a trivial task (Gini, 2008). Alternatively, it is appropriate to choose the values $x_j$ according to experimental data.

3.2 Task application

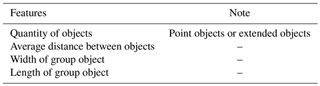

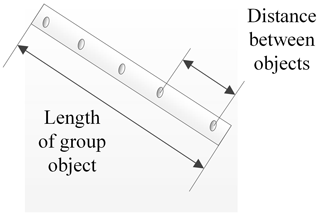

In our study, we sought a set of features for the classification procedure (Table 1). These features take into account the regularity with which they occur for the object under consideration. For example, a stretch of miscellaneous random spots is associated with an extended target, whereas vehicles in a car park appear as ordered elements of similar spots on the SAR image.

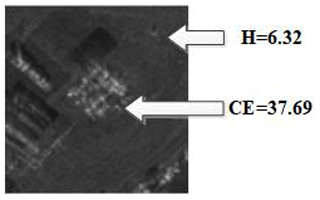

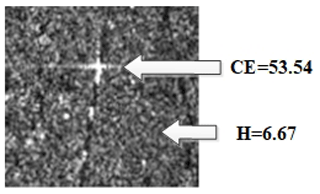

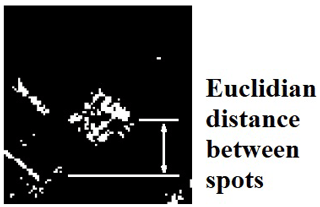

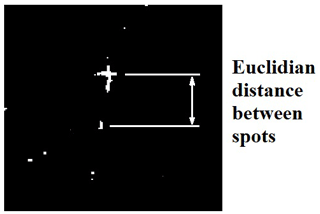

SAR images are usually presented as grey-scale pictures (Soumekh, 1999). Morphological processing makes it possible to evaluate the difference between target spots and noise using the value of entropy. The binarized image then allows point objects to be detected by a brightness threshold and subsequently collected into an extended target (Wang et al., 2015) (Figs. 3–8).

Entropy values differ when the speckle pattern of the images is analysed. If the SAR image is taken closer to the underlying terrain, the entropy is slightly higher: $H_1 > H_2$. At the same time, the change in cross-entropy is more substantial than that of the speckle pattern: $CE_1 > CE_2$ (Figs. 3 and 4). The optimal $CE$ can be found according to Eq. (9) as a function of the binarization threshold and of the SAR images selected for different altitudes and aspect angles (Figs. 5 and 6) (Knott et al., 2004).
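To make the two operations concrete, the sketch below computes the Shannon entropy of a grey-level histogram and binarizes an image by a brightness threshold. It assumes an 8-bit image stored as nested lists; the tiny image and the threshold value are hypothetical, and the entropy estimator used in the study may differ.

```python
import math
from collections import Counter

def shannon_entropy(pixels):
    """Entropy (in bits) of the grey-level histogram of a flat pixel list."""
    counts = Counter(pixels)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def binarize(image, threshold):
    """Mark pixels at or above the brightness threshold as target (1)."""
    return [[1 if value >= threshold else 0 for value in row] for row in image]

def flatten(image):
    """Turn a nested-list image into a flat list of pixel values."""
    return [value for row in image for value in row]

# Hypothetical 3 x 4 grey-scale patch: dark background with bright spots.
image = [
    [12, 10, 200, 210],
    [11, 13, 205, 9],
    [10, 12, 11, 10],
]
mask = binarize(image, threshold=128)

print(shannon_entropy(flatten(image)))  # entropy of the grey-scale patch
print(shannon_entropy(flatten(mask)))   # entropy after binarization
```

Sweeping `threshold` and recording the resulting entropy is one simple way to study how the binarization level affects the information content of the target spot.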

First, the spatial characteristics of civil objects are derived from all target spots extracted from a binarized image. Then all objects are identified by cluster analysis (Figs. 7 and 8). Objects should be separated into extended targets (ETs) and single group targets (SGTs) (Zhu et al., 2004). To apply this condition, we use Eq. (10):

where $\Omega$ is the finite coordinate domain of the spots in the ROI; $x_i$ and $y_i$ are the Cartesian coordinates of the binarized target spots in the ROI; $\mathrm{dist}(x_i, y_i)$ is the pairwise averaged Euclidean distance between all objects on the plane; $T$ is the distance threshold between all binarized target spots in the ROI.
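The quantity described above, the pairwise averaged Euclidean distance compared with a threshold $T$, can be sketched as follows. The spot coordinates and the threshold are hypothetical. Which side of the threshold corresponds to an ET and which to an SGT is not fixed by this sketch, so the function only reports whether the mean distance is within the threshold.

```python
import math
from itertools import combinations

def mean_pairwise_distance(points):
    """Average Euclidean distance over all unordered pairs of spots."""
    pairs = list(combinations(points, 2))
    if not pairs:
        return 0.0
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)

def within_threshold(points, threshold):
    """True if the mean pairwise distance does not exceed the threshold T."""
    return mean_pairwise_distance(points) <= threshold

# Hypothetical spot centroids (pixels) inside one ROI.
spots = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
print(mean_pairwise_distance(spots))          # distances 5, 10 and 5 are averaged
print(within_threshold(spots, threshold=7.0))
```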

It is important to emphasize that the number and size of binarized spots depend on the altitude and aspect angle of the UAV. For example, the correlation coefficient between features extracted from data with a perfectly estimated aspect angle and from data with a 10° aspect angle error is 0.983 (Doo et al., 2017). On this basis, we choose the distance threshold from experimental data, assuming that the radar accuracy does not exceed 1 m per pixel (Soumekh, 1999).

4 Construct a neural network for civil objects

The initial information on targets was obtained by extracting the miscellaneous spots from the binarized SAR image. The civil objects chosen were infrastructure elements (power lines and blocks of town or countryside) and vehicles (cars in a car park or agricultural machinery). The number of units (classes) was three. The total number of features was four. The training data comprised 192 observations.

According to Eq. (3), the cross-entropy value can be simplified if we assume that the shift of the postulated distribution is $\mathrm{bias}[p(y_0)] \to 1$. Then the competing distributions have the distribution density

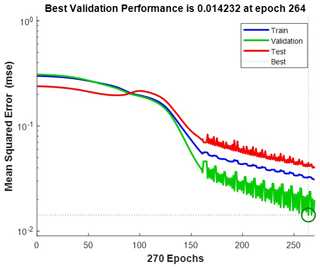

An artificial neural network was constructed by gradient descent with adaptive learning rate backpropagation. We used four hidden layers with a log-sigmoid transfer function and a linear output layer. The results of the network are shown in Figs. 9 and 10.
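A network of this shape can be sketched in NumPy as below: four log-sigmoid hidden layers, a linear output layer, a mean-squared-error loss, and gradient descent whose learning rate grows after a successful step and shrinks after a rejected one. The hidden-layer width (8), the initial learning rate, the rate factors (1.05 and 0.7), the epoch count, and the random data are all assumptions for illustration; the article does not state these values.

```python
import numpy as np

def logsig(z):
    """Log-sigmoid transfer function."""
    return 1.0 / (1.0 + np.exp(-z))

def init_network(sizes, rng):
    """One (weights, bias) pair per layer; sizes = [inputs, hidden..., outputs]."""
    return [(rng.normal(scale=0.5, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(X, layers):
    """Activations of every layer; the last entry is the network output."""
    acts = [X]
    for W, b in layers[:-1]:
        acts.append(logsig(acts[-1] @ W + b))    # log-sigmoid hidden layers
    W, b = layers[-1]
    acts.append(acts[-1] @ W + b)                # linear output layer
    return acts

def mse(pred, target):
    return float(np.mean((pred - target) ** 2))

def gradient_step(X, Y, layers, lr):
    """One backpropagation step on the MSE loss; returns updated layers."""
    acts = forward(X, layers)
    delta = 2.0 * (acts[-1] - Y) / Y.size        # dMSE / d(output)
    updated = []
    for i in range(len(layers) - 1, -1, -1):
        W, b = layers[i]
        grad_W, grad_b = acts[i].T @ delta, delta.sum(axis=0)
        if i > 0:  # pass the error back through the previous log-sigmoid
            delta = (delta @ W.T) * acts[i] * (1.0 - acts[i])
        updated.append((W - lr * grad_W, b - lr * grad_b))
    return updated[::-1]

def train(X, Y, layers, lr=0.05, epochs=200, lr_inc=1.05, lr_dec=0.7):
    """Gradient descent with a simple adaptive learning rate."""
    loss = mse(forward(X, layers)[-1], Y)
    for _ in range(epochs):
        candidate = gradient_step(X, Y, layers, lr)
        new_loss = mse(forward(X, candidate)[-1], Y)
        if new_loss < loss:      # accept the step and speed up
            layers, loss, lr = candidate, new_loss, lr * lr_inc
        else:                    # reject the step and slow down
            lr *= lr_dec
    return layers, loss

# Synthetic stand-in for the data: 192 observations, 4 features, 3 classes.
rng = np.random.default_rng(0)
X = rng.normal(size=(192, 4))
Y = np.eye(3)[rng.integers(0, 3, size=192)]     # one-hot class targets
layers = init_network([4, 8, 8, 8, 8, 3], rng)
initial_loss = mse(forward(X, layers)[-1], Y)
layers, final_loss = train(X, Y, layers)
print(initial_loss, final_loss)
```

Because a step is kept only when it lowers the loss, the training loss in this sketch never increases; the split into training, validation, and test subsets is omitted for brevity.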

Figure 9Mean squared error plot as of loss function: training – 70 %, validation – 15 %, test – 15 %.

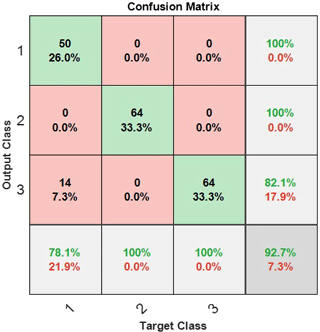

Figure 10Confusion matrix for recognition classes: 1 – power lines, 2 – block of countryside, 3 – group of vehicles.

The loss function reaches $\mathrm{MSE} \approx 0.014$ (Fig. 9), which is an acceptable result. There is some class confusion (Fig. 10): power lines can be detected as a group of vehicles with probability $\langle P_{\mathrm{conf}} \rangle \approx 0.07$. The other results raise no questions about recognition accuracy.
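Quantities of this kind are read off a confusion matrix as shown in the sketch below. The matrix is hypothetical and does not reproduce the values of Fig. 10; rows are taken to be true classes and columns predicted classes, which is an assumed convention.

```python
def accuracy(cm):
    """Share of samples on the main diagonal (correctly classified)."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total

def confusion_rate(cm, true_class, predicted_class):
    """Fraction of `true_class` samples that were labelled `predicted_class`."""
    return cm[true_class][predicted_class] / sum(cm[true_class])

# Hypothetical counts for three classes (index 0, 1, 2).
cm = [
    [9, 0, 1],    # one class-0 sample mistaken for class 2
    [0, 10, 0],
    [0, 0, 10],
]
print(accuracy(cm))               # overall accuracy
print(confusion_rate(cm, 0, 2))   # class 0 detected as class 2
```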

5 Comparison with other methods

ATC SAR of non-moving civil objects is addressed in several articles. In the paper by Kim et al. (2014), the correct classification performance for the final 10- and 20-target classifiers was 77.4 % and 66.2 %, respectively (resolution 1 m per pixel). This is lower than the accuracy of 92.7 % obtained here.

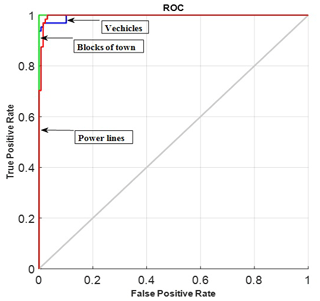

In the paper by Halversen et al. (1994), the recognition of group objects was studied on the basis of automated target cueing. The dataset contained 24 target groups, each consisting of 6 to 11 targets. That research applied only a pure binarized portrait, without the study of the SAR images carried out here. The CFAR reached $P_{\mathrm{CFAR}} \approx 0.29$ at a high resolution of 1 m per pixel. We analysed our data for false positive errors using the receiver operating characteristic (Fig. 11).

Our CFAR estimate, $P_{\mathrm{CFAR}} \le 0.01$, is lower than that of Halversen et al. (1994). Thus, our results show a reasonable degree of reliability in recognizing group objects with a neural network.
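For readers unfamiliar with the receiver operating characteristic, the sketch below computes its points, pairs of false positive rate and true positive rate, for a binary decision at every score threshold. The scores and labels are hypothetical; the article's multi-class ROC in Fig. 11 would require one such curve per class.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) for each score threshold.

    labels are 1 for target and 0 for non-target; a sample is declared a
    target when its score is at or above the threshold.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    points = []
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

# Hypothetical classifier scores and ground-truth labels.
scores = [0.9, 0.8, 0.7, 0.3, 0.2]
labels = [1, 1, 0, 1, 0]
for fpr, tpr in roc_points(scores, labels):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Choosing the operating threshold so that the false positive rate stays below a fixed bound is the sense in which a false-alarm criterion constrains the classifier.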

The recognition of point targets is illustrated in Cho and Park (2018) and Ernisse et al. (1997). Our result (92.7 %) exceeds the value achieved with the airborne radar of the F-15E (Ernisse et al., 1997). A recognition accuracy of 95 % was obtained with the multiple feature-based convolutional neural network method (Cho and Park, 2018). Our result is comparable to this value, but that study omits an estimate of the false positive rate, which casts doubt on the efficiency of the method it proposes.

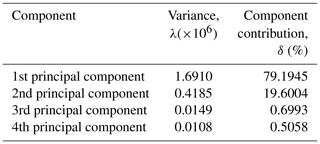

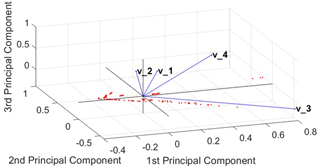

6 Discussion and estimation of the results

The efficiency of object classification is proportional to the number of features and the size of the dataset for all classes. We estimated the final result by applying principal component analysis to the initial dataset (Kawalec et al., 2006). The original reduced training sample is transformed into an orthogonal basis according to Eq. (12), in which the coordinate axes coincide with the directions of maximum variance in descending order, $\lambda_{\max} \dots \lambda_{\min}$. The contribution of each orthogonal component is given in Table 2.

The contribution of the first two main components (out of four evaluated) is δ5>98 %. The data effectiveness can be estimated based on their projections on the three main axes (Fig. 12).

Figure 12. First, second, and third principal components of the training sample: v1 – quantity of objects; v2 – average distance between objects; v3 – length of civil object; v4 – width of civil object.

The plot shows the correlation in the orthogonal basis of the principal components. It indicates substantial independence between all the original data. The most dependent features are the length (v3) and width (v4) of the group object. This is expected, since the dimensions of a spatial object are correlated with each other. In general, there is no need to extend the space of input classes. Nevertheless, the ROI requires a reference point so that all recognition groups occupy disjoint zones on the terrain.

7 Conclusion

The article proposes a technique for classifying non-moving civil objects based on an estimate of the entropy extracted from SAR images. The images under study were acquired by a UAV at different altitudes and aspect angles. The recognition features were chosen by minimizing the cross-entropy calculated for the naive Bayesian classifier model. It is shown that, in a binarized image, the cross-entropy of the target spot can differ from that of the speckle pattern. An efficient classification approach using an artificial neural network was demonstrated.

A training set (192 observations; four features) was used to learn three classes of non-moving civil targets: power lines, block of town, and group of vehicles. The probability of correct object classification is $P \approx 0.927$ with a low constant false-alarm rate, $P_{\mathrm{CFAR}} \le 0.01$. These indicators equal or exceed the results of similar methods.

The classification results were verified using principal component analysis. The check showed a substantial degree of decorrelation among the features studied. Further research should aim to clarify the spatial characteristics by extending the experimental data.

Data availability

The underlying measurement data are not publicly available but can be requested from the authors if required.

Author contributions

AVK carried out the research on the technique, the modelling, and the data analysis and wrote the article. VPS provided administrative support.

Competing interests

The authors declare that they have no conflict of interest.

Acknowledgements

The author is grateful to his mother Nina Kvasnova for her support during preparation of this article.

Financial support

This research has been supported by the Ministry of Science and Higher Education of the Russian Federation (World-class Research Center program: Advanced Digital Technologies) (contract no. 075-15-2020-934 dated 17 November 2020).

Review statement

This paper was edited by Rosario Morello and reviewed by two anonymous referees.

References

Cho, J. H. and Park, C. G.: Multiple Feature Aggregation Using Convolutional Neural Networks for SAR Image-Based Automatic Target Recognition, IEEE Geosci. Remote Sens. Lett., 15, 1882–1886, https://doi.org/10.1109/LGRS.2018.2865608, 2018.

Doo, S. H., Smith, G. E., and Baker, C. J.: Aspect invariant features for radar target recognition, IET Radar Sonar Navigat., 11, 597–604, https://doi.org/10.1049/iet-rsn.2016.0075, 2017.

El-Darymli, K., Gill, E. W., Mcguire, P., Power, D., and Moloney, C.: Automatic Target Recognition in Synthetic Aperture Radar Imagery: A State-of-the-Art Review, IEEE Access, 4, 6014–6058, https://doi.org/10.1109/ACCESS.2016.2611492, 2016.

Ernisse, B. E., Rogers, S. K., DeSimio, M., and Raines, R. A.: An automatic target cuer/recognizer for tactical fighters, in: vol. 3, 1997 IEEE Aerospace Conference, Snowmass, Aspen, CO, USA, 441–455, https://doi.org/10.1109/AERO.1997.574899, 1997.

Gini, F.: Knowledge Based Radar Detection, Tracking and Classification, Wiley-Interscience publication, Canada, p. 273, 2008.

Halversen, S. D., Owirka, G. J., and Novak, L. M.: New approaches for detecting groups of targets, in: vol. 1, Proceedings of 1994 28th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 137–140, https://doi.org/10.1109/ACSSC.1994.471432, 1994.

Huimin, C. and Baoshu, W.: A Template-based Method for Force Group Classification in Situation Assessment, in: 2007 IEEE Symposium on Computational Intelligence in Security and Defense Applications, Honolulu, HI, 85–91, https://doi.org/10.1109/CISDA.2007.368139, 2007.

Jung, C. H., Yang, H. J., and Kwag, Y. K.: Local cell-averaging fast CFAR for multi-target detection in high-resolution SAR images, in: 2009 2nd Asian-Pacific Conference on Synthetic Aperture Radar, Xian, Shanxi, 206–209, https://doi.org/10.1109/APSAR.2009.5374239, 2009.

Kawalec, A., Owczarek, R., and Dudczyk, J.: Karhunen–Loeve Transformation in Radar Signal Features Processing, in: 2006 International Conference on Microwaves, Radar & Wireless Communications, Krakow, 1168–1171, 2006.

Kim, S., Cha, D., and Kim, S.: Detection of group of stationary targets using high-resolution SAR and EO images, in: IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, 1769–1772, https://doi.org/10.1109/IGARSS.2014.6946795, 2014.

Knott, E., Shaeffer, J., and Tuley, M.: Radar cross section, 2nd Edn., SciTech Publishing Inc., Boston, Canada, p. 637, 2004.

Kvasnov, A. V.: Method of Classification of Fixed Ground Objects by Radar Images with the Use of Artificial Neural Networks, in: Proceedings of the International Conference on Cyber-Physical Systems and Control (CPS&C'2019), Peter the Great St. Petersburg Polytechnic University, Saint Petersburg, Russia, 608–616, 2019.

Kvasnov, A. V.: Methodology of classification and recognition of the radar emission sources based on Bayesian programming, IET Radar Sonar Navigat., 14, 1175–1182, https://doi.org/10.1049/iet-rsn.2019.0380, 2020.

Labowski, M., Kaniewski, P., Serafin, P., and Wajszczyk, B.: Object georeferencing in UAV-based SAR terrain images, Annu. Navigat., 23, 39–52, https://doi.org/10.1515/aon-2016-0003, 2016.

Lee, J. S. and Pottier, E.: Polarimetric Radar Imaging: From Basics to Applications, in: Optical Science and Engineering, CRC Press, USA, p. 400, 2009.

Long, T., Zeng, T., Hu, C., Dong, X., Chen, L., Liu, Q., Xie, Y., Ding, Z., Li, Y., Wang, Y., and Wang, Y.: High resolution radar real-time signal and information processing, China Commun., 16, 105–133, 2019.

Moreira, A., Prats-Iraola, P., Younis, M., Krieger, G., Hajnsek, I., and Papathanassiou, K. P.: A tutorial on synthetic aperture radar, IEEE Geosci. Remote Sens. Mag., 1, 6–43, https://doi.org/10.1109/MGRS.2013.2248301, 2013.

Moulton, J., Kassam, S., Ahmad, F., Amin, M., and Yemelyanov, K.: Target and change detection in synthetic aperture radar sensing of urban structures, in: 2008 IEEE Radar Conference, Rome, 1–6, https://doi.org/10.1109/RADAR.2008.4721104, 2008.

Novak, L. M., Owirka, G. J., and Brower, W. S.: An efficient multi-target SAR ATR algorithm, in: vol. 1, Conference Record of Thirty-Second Asilomar Conference on Signals, Systems and Computers (Cat. No. 98CH36284), Pacific Grove, CA, 3–13, https://doi.org/10.1109/ACSSC.1998.750815, 1998.

Owirka, G. J., Halversen, S. D., Hiett, M., and Novak, L. M.: An algorithm for detecting groups of targets, in: Proceedings International Radar Conference, Alexandria, VA, USA, 641–643, https://doi.org/10.1109/RADAR.1995.522624, 1995.

Pillai, S. U., Li, K. Y., and Himed, B.: Space Based Radar: Theory and Applications, McGraw-Hill Education, New York, USA, p. 443, 2008.

Soumekh, M.: Synthetic aperture radar signal processing with MATLAB algorithms, Wiley, New York, USA, p. 616, 1999.

Srinivas, U., Monga, V., and Raj, R. G.: SAR Automatic Target Recognition Using Discriminative Graphical Models, IEEE Trans. Aerosp. Electron. Syst., 50, 591–606, https://doi.org/10.1109/TAES.2013.120340, 2014.

Ullmann, I., Adametz, J., Oppelt, D., Benedikter, A., and Vossiek, M.: Non-destructive testing of arbitrarily shaped refractive objects with millimetre-wave synthetic aperture radar imaging, J. Sens. Sens. Syst., 7, 309–317, https://doi.org/10.5194/jsss-7-309-2018, 2018.

Wang, Z., Du, L., Wang, F., Su, H., and Zhou, Y.: Multi-scale target detection in SAR image based on visual attention model, in: 2015 IEEE 5th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Singapore, 704–709, https://doi.org/10.1109/APSAR.2015.7306303, 2015.

Yin, D., Miao, Y., Li, G., and Cheng, B.: Multi-scale feature analysis method for bridge recognition in SAR images, in: 2007 1st Asian and Pacific Conference on Synthetic Aperture Radar, Huangshan, 517–520, https://doi.org/10.1109/APSAR.2007.4418663, 2007.

Yu, X., Li, Y., and Jiao, L. C.: SAR Automatic Target Recognition Based on Classifiers Fusion, in: 2011 International Workshop on Multi-Platform/Multi-Sensor Remote Sensing and Mapping, Xiamen, 1–5, https://doi.org/10.1109/M2RSM.2011.5697404, 2011.

Zhoufeng, L., Qingwei, P., and Peikun, H.: An improved algorithm for the detection of vehicle group targets in high-resolution SAR images, in: 2002 3rd International Conference on Microwave and Millimeter Wave Technology. Proceedings, ICMMT 2002, Beijing, China, 572–575, https://doi.org/10.1109/ICMMT.2002.1187764, 2002.

Zhu, H., Lin, N., Leung, H., Leung, R., and Theodoidis, S.: Target Classification From SAR Imagery Based on the Pixel Grayscale Decline by Graph Convolutional Neural Network, IEEE Sensors Lett., 4, 7002204, https://doi.org/10.1109/LSENS.2020.2995060, 2004.
