the Creative Commons Attribution 4.0 License.

Integration and evaluation of the high-precision MotionCam-3D into a 3D thermography system

Miguel-David Méndez-Bohórquez

Sebastian Schramm

Robert Schmoll

Andreas Kroll

Infrared thermal imaging enables fast, accurate and non-contact measurement of temperature distributions. However, 2D representations of 3D objects often require several images to provide significant information. For such cases, 3D thermograms allow a quick temporal and spatial analysis. In this paper, the integration of an industrial high-precision 3D sensor into a 3D thermography system is presented. The performances of the existing and new systems are assessed and compared by analyzing 3D thermograms of an industry-related test object. The geometry of the obtained point cloud is evaluated by means of a non-referenced point cloud quality assessment approach. It is shown that, in terms of spatial resolution and local curvature, the proposed system performs significantly better than the existing one.

1 Introduction

Infrared thermography is a measurement technique which generates thermal images from the infrared radiation emitted by the object to be measured. These images are called thermograms, and the value of the temperature contained in each pixel is provided by the digital response of the thermal detector elements of the camera. For this purpose, the camera sensor provides radiance-proportional values, which are converted into radiation temperature values using a known emissivity and a calibration function determined by the camera manufacturer. Thermography is an attractive approach to measuring temperature. It is non-invasive, is contactless, and provides a quick response compared with contact thermometers. In addition, thermography cameras provide spatially distributed temperature fields. This technique has been used in various applications, such as in situ detection of defects in chip manufacturing (Bin et al., 2024), the monitoring of additive manufacturing processes (Gardner et al., 2022) or the detection of breast cancer (Greeshma et al., 2023). Nevertheless, 2D thermograms are of limited use in situations where the field of view of the cameras is not able to cover completely the surface of interest or there are hotspots hidden by other objects. Such a planar representation does not allow a detailed analysis and is therefore insufficient for decision-making.

Three-dimensional thermograms are an interesting alternative to overcome this limitation, and a variety of systems have been developed over the past few years. These systems can be divided into two groups, depending on whether a 3D sensor is used to capture the geometry of the objects or an alternative methodology is applied for this purpose. The latter is the case with Ng and Du (2005), who showed the utility of 3D thermograms for the acquisition of data to validate finite-element-method simulations. The authors presented a method for the projection of the thermograms onto a coordinate system and a reconstruction algorithm based on the octree carving technique. The results allow the surface temperature distribution of a car model to be obtained without the use of a wide set of contact thermometers. Ham and Golparvar-Fard (2013) deployed a commercial handheld thermal-imaging camera for generating 3D spatio-thermal models to assess the energy performance of existing buildings. The 3D models were generated with the aid of GPU-based structure-from-motion and multi-view stereo algorithms. Sels et al. (2019) presented a computer-aided design (CAD) matching method for complex objects. The image plane of the thermal image was aligned with the virtual scene of the CAD model to subsequently map pixel-wise the temperature values onto the 3D model. With respect to the 3D sensor group, there is a clear trend towards systems configured with laser scanners and thermographic cameras. Grubišić et al. (2011) presented a system in which the concepts of active and passive thermography were exploited to obtain and inspect 3D models of a human chest for detecting breast cancer.

Lagüela et al. (2011) presented a methodology for texturing the point clouds obtained with a 3D laser scanner. Control points, visible for both the scanner and the thermal-imaging camera, were placed on the scene to obtain the geometrical relationship between the thermogram and the point cloud. Wang et al. (2013) presented a rigidly assembled system. In this case, the temperature values are not represented as RGB values but are assigned directly to the points as non-graphic values. The data from the different sensors are fused by means of a perspective reprojection, which uses the geometrical relationship of the optical components calculated from the assembly. Hellstein and Szwedo (2016) also proposed an assembled system, which was used in the context of active thermography to inspect boat hulls. The system in this case was geometrically calibrated to obtain not only the intrinsic parameters, but also the extrinsic parameters. Costanzo et al. (2014) inspected a historical building with a terrestrial laser scanner and a thermal-imaging camera. A methodology was proposed to align multiple scans of a subcentimeter resolution scanner with a single scan of a submillimeter resolution scanner. The thermal data were assigned to each point in the 3D model by means of a geometrical reprojection. A calibration procedure was carried out to obtain the parameters frame by frame, where at least 11 correspondences were matched to find the relationship between the 3D point cloud and the 2D thermal images. The system obtains the bulk information of the building from the first survey and captures the details with the second survey. The analysis in conjunction with the temperature values allows anomalies and key aspects of the structural integrity of the building to be identified. Campione et al. (2020) also presented a decoupled system in which the depth maps and the thermograms are obtained separately. 
A set of 3D points is obtained and then filtered by two consecutive exclusion stages to then be texturized with the temperature readings. Afterward, an extrinsic calibration is done frame by frame in order to map the thermograms to the 3D model.

In addition to laser scanners, depth cameras are also an interesting alternative for the construction of portable systems. Vidas and Moghadam (2013) presented a system which employs a ray casting method for the temperature assignment. It takes into account five weighting factors that consider the velocity of the camera, the angle between the ray and the surface normal of the vertex, and the distortions of the optics, among others. This was subsequently upgraded in Vidas et al. (2015) to achieve real-time 3D modeling. Rangel and Soldan (2014) developed a system which fuses the data obtained from a thermal-imaging camera and a low-cost depth sensor. The extrinsic calibration of the cameras was addressed with a calibration target board designed to be visible in the spectral ranges of both sensors. Ordóñez Müller and Kroll (2017) implemented the Kinect Fusion algorithm to extend the creation of 3D models. The model is represented by a volumetric grid composed of unit voxels, which are texturized with the temperature readings of a thermal-imaging camera. Although the system yields high-3D-fidelity thermograms, objects above a certain size cannot be modeled owing to the limited memory of the graphics card. Schramm et al. (2020) compared the performance of three point cloud registration algorithms, InfiniTAM (Prisacariu et al., 2017), Kintinuous (Whelan et al., 2015a) and ElasticFusion (Whelan et al., 2015b), to construct 3D thermograms. The best performance was obtained by ElasticFusion in terms of the speed of the calculation and the accuracy of the geometry, and it was therefore the most suitable option for constructing large-scale thermograms in real time. Nevertheless, the models of this system, in contrast to the results that are obtained with the 3D thermography system of Ordóñez Müller and Kroll, do not exhibit the same geometrical fidelity. 
Although the quality of the 3D point cloud also depends on the registration algorithm, this is mostly influenced by the technology deployed for the measurements. For the system of Schramm et al., the depth sensor can be categorized as a low-cost sensor whose accuracy (<4 cm at 2 m distance) could be inappropriate for some engineering applications.

The implementation of an industrial high-precision 3D sensor (from now on referred to as the “new system”) within the 3D thermography system described in Schramm et al. (2020) (from now on referred to as the “existing system”) is presented in the present paper, which is structured as follows. In Sect. 2, the 3D thermography system is presented. In Sect. 3, the performances of both systems are assessed and compared through an experimental evaluation. In Sect. 4, the conclusions are presented together with an outline of future work.

2 3D thermography system

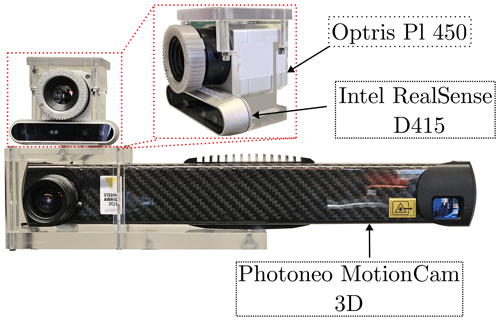

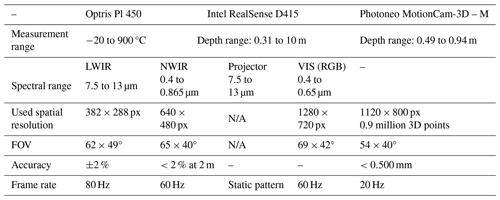

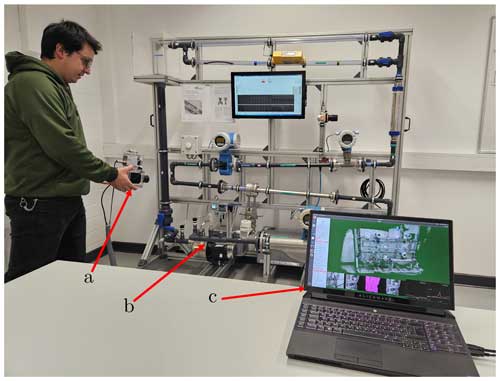

The sensor system consists of a long-wave infrared (LWIR) camera, a depth sensor and an RGB camera, which are assembled in a plexiglass frame to preserve their relative positions. For the existing system (enclosed in the red dotted square in Fig. 1), the depth measurements are carried out by the Intel RealSense D415, which operates with stereo vision technology and a projected near-infrared pattern to enrich features for the stereo matching. The new system operates with the MotionCam-3D from Photoneo, which retrieves the depth measurements from a processed parallel-structured light pattern. The color frames and the thermograms are still obtained by the Intel RealSense D415 and the Optris PI 450, respectively (see Fig. 1). The technical data of the deployed sensors are presented in Table 1.

Table 1. Technical data of the sensors deployed for the 3D thermography system (Optris-GmbH, 2024; Intel-RealSense, 2023; Photoneo, 2024). N/A: not applicable.

The 3D thermograms are generated by processing 3D points with the ElasticFusion registration algorithm developed by Whelan et al. (2015b) and extended by Schramm et al. (2020). Basically, ElasticFusion operates by deforming (enlarging) the current point cloud model with new 3D thermal points. These points are selected from the entire depth map after iteratively searching the point candidates to be merged into the model, based on a convergence criterion of an optimization process. Furthermore, before each depth map is processed, it is texturized with the color and temperature readings, both represented with RGB maps. To this end, the program reprojects the thermograms and RGB frames to the plane of the depth image (see Sect. 2.2).
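
The RGB representation of the temperature readings can be illustrated with a small look-up table. The following Python sketch is only an assumption-based example: the blue-to-red ramp, the table size of 256 entries and the function names `build_lut` and `temperature_to_rgb` are hypothetical and are not taken from the system's implementation.

```python
def build_lut(n=256):
    """Hypothetical blue-to-red color ramp with n entries (assumed, not the system's palette)."""
    return [(round(255 * i / (n - 1)), 0, round(255 * (1 - i / (n - 1)))) for i in range(n)]


def temperature_to_rgb(temp, t_min, t_max, lut):
    """Map a scalar temperature to an RGB triple by indexing a look-up table.

    Temperatures outside [t_min, t_max] are clamped to the ends of the table.
    """
    if t_max <= t_min:
        raise ValueError("t_max must be larger than t_min")
    frac = (temp - t_min) / (t_max - t_min)
    frac = min(1.0, max(0.0, frac))
    return lut[round(frac * (len(lut) - 1))]


lut = build_lut()
cold = temperature_to_rgb(20.0, 20.0, 60.0, lut)  # lower end of the assumed range
hot = temperature_to_rgb(60.0, 20.0, 60.0, lut)   # upper end of the assumed range
```

Because the mapping is a plain table index, the scalar temperature can only be recovered from the RGB value if the same table and temperature range are known afterwards.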

These processing tasks are performed by a C++ (CUDA) executable developed for this purpose. It exploits the hardware capabilities of a laptop with a high-end graphics card (GeForce GTX 980M, 4 GB VRAM) by implementing multithreaded computations. This architecture was chosen because it provides the computational speed needed for the required calculations. In Fig. 2, an example of the system in operation is presented.

Figure 2. Example of operating the 3D thermography system. Label (a) marks the sensor assembly, label (b) the test object and label (c) a live view of the generated model.

Two main issues had to be addressed for the integration of the MotionCam-3D. The first was the perturbation of the color images caused by the red-light pattern of the MotionCam-3D, which was handled by setting the red component of the color vector to 0. The second was the temporal synchronization of the three cameras. As the postprocessing features of the MotionCam-3D introduce a time delay into the depth frames, the system must search backwards in the temperature and color buffers for the frames which match the last depth measurement in time. Once the three images are temporally quasi-aligned, they are processed by the extended ElasticFusion algorithm.
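
The two integration steps can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the C++ (CUDA) implementation: buffers are assumed to be lists of `(timestamp, frame)` tuples in ascending time order, "matching in time" is interpreted as the nearest timestamp, and the names `suppress_red`, `match_frame` and `synchronize` are hypothetical.

```python
def suppress_red(pixel):
    """Set the red component of an (R, G, B) color vector to 0."""
    _, g, b = pixel
    return (0, g, b)


def match_frame(buffer, t_depth):
    """Search backwards through a time-ordered buffer for the frame closest to t_depth.

    Returns the (timestamp, frame) tuple with the smallest time gap, or None
    if the buffer is empty.
    """
    best = None
    for ts, frame in reversed(buffer):
        gap = abs(ts - t_depth)
        if best is None or gap < best[0]:
            best = (gap, ts, frame)
        elif ts < t_depth:
            break  # frames further back in time can only be farther away
    return None if best is None else (best[1], best[2])


def synchronize(depth_frame, thermal_buffer, color_buffer):
    """Return (depth, thermogram, color) frames that are quasi-aligned in time."""
    t_depth, depth = depth_frame
    thermal = match_frame(thermal_buffer, t_depth)
    color = match_frame(color_buffer, t_depth)
    if thermal is None or color is None:
        return None
    return depth, thermal[1], color[1]
```

A practical variant would probably also reject matches whose time gap exceeds a tolerance; such a threshold is not stated in the text and is therefore omitted here.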

2.1 Geometric model

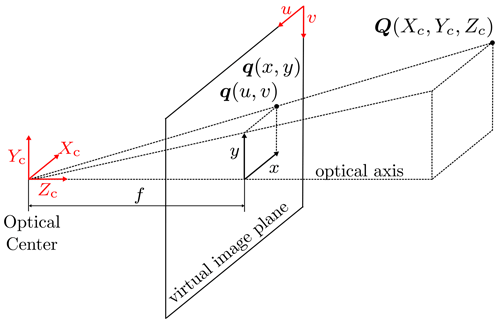

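The pinhole model was employed to represent the relation between an object point Q[Xc Yc Zc] in the 3D world and the point q[u v] in the 2D image:

$$
Z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = M \begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix}, \qquad M = \begin{bmatrix} f_u & 0 & c_u \\ 0 & f_v & c_v \\ 0 & 0 & 1 \end{bmatrix}, \tag{1}
$$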

where the camera matrix is denoted by M, the focal length scaling factors are fu and fv (px), and the image centers are cu and cv (px), which are obtained from the intrinsic calibration of each camera: see Sect. 2.2.

Figure 3. Schematic representation of the pinhole model, based on Schramm et al. (2021a).
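
As an illustration of the pinhole relation, the following Python sketch projects a 3D point given in camera coordinates onto the image plane and inverts the projection when the depth is known. The function names and the numerical intrinsics are illustrative assumptions and are not the calibrated values of the system; lens distortion is ignored.

```python
def project(point_c, fu, fv, cu, cv):
    """Project a point (Xc, Yc, Zc) in camera coordinates to pixel coordinates (u, v)."""
    x, y, z = point_c
    if z <= 0:
        raise ValueError("the point must lie in front of the camera (Zc > 0)")
    return (fu * x / z + cu, fv * y / z + cv)


def back_project(u, v, depth, fu, fv, cu, cv):
    """Recover (Xc, Yc, Zc) from a pixel (u, v) and its depth Zc."""
    return ((u - cu) * depth / fu, (v - cv) * depth / fv, depth)


# Assumed intrinsics in pixels, chosen only for this example.
fu, fv, cu, cv = 600.0, 600.0, 320.0, 240.0
u, v = project((0.5, -0.25, 2.0), fu, fv, cu, cv)
point = back_project(u, v, 2.0, fu, fv, cu, cv)
```

The back-projection is what a depth camera makes possible: each depth pixel defines one 3D point, which is the starting point for the reprojection of the other two cameras.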

In order to formulate the camera model with reference to an overall origin, the data collected from the color camera and the thermal-imaging camera are reprojected from the Cartesian coordinate system “csi” of each camera to a reference system “csu”, whose origin in this case is placed at the optical center of the MotionCam-3D. The coordinates in the reference system are given by

$$
Q_{\mathrm{csu}} = R\,Q_{\mathrm{csi}} + t, \tag{2}
$$

where R is the rotation matrix and t is the translation vector, which were obtained by means of a geometrical extrinsic calibration, since it is not feasible to locate the coordinate origin of each camera by direct length measurements.

2.1.1 Point cloud texturization

As was previously mentioned, the frames of the color camera and the thermal-imaging camera are represented in the model with RGB values. In the case of the thermograms, the temperature scalar values are transformed to the RGB vectors by means of a look-up table. For the texturization of the point cloud, the frames of both cameras are first resized to the dimensions of the ones delivered by the depth camera. Afterward, these RGB-represented frames are reprojected to the overall coordinate system csu by the combination of Eq. (1) with Eq. (2):

where r corresponds to the color or thermal-imaging camera and d is the depth camera.
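
The texturization step can be sketched as follows. The sketch assumes that R and t map coordinates of camera r into the depth camera system, so that the inverse transform is applied to each back-projected depth pixel before it is projected into the image of camera r. Nearest-pixel sampling, the tuple layout `(fu, fv, cu, cv)` and all function names are assumptions made for this example.

```python
def back_project(u, v, depth, cam):
    """Depth pixel (u, v) with depth Z to a 3D point in the depth camera system."""
    fu, fv, cu, cv = cam
    return ((u - cu) * depth / fu, (v - cv) * depth / fv, depth)


def to_camera_frame(point_u, R, t):
    """Invert Q_u = R Q_r + t, i.e. compute Q_r = R^T (Q_u - t)."""
    d = [point_u[k] - t[k] for k in range(3)]
    return tuple(sum(R[row][col] * d[row] for row in range(3)) for col in range(3))


def project(point, cam):
    """Pinhole projection; returns None for points behind the camera."""
    fu, fv, cu, cv = cam
    x, y, z = point
    if z <= 0:
        return None
    return (fu * x / z + cu, fv * y / z + cv)


def texturize(depth_map, cam_d, image_r, cam_r, R, t):
    """Assign to every valid depth pixel the value seen by camera r (None if not visible)."""
    rows, cols = len(depth_map), len(depth_map[0])
    out = [[None] * cols for _ in range(rows)]
    for v in range(rows):
        for u in range(cols):
            depth = depth_map[v][u]
            if depth <= 0:
                continue  # no valid depth measurement at this pixel
            point_r = to_camera_frame(back_project(u, v, depth, cam_d), R, t)
            q = project(point_r, cam_r)
            if q is None:
                continue
            ur, vr = round(q[0]), round(q[1])
            if 0 <= vr < len(image_r) and 0 <= ur < len(image_r[0]):
                out[v][u] = image_r[vr][ur]
    return out
```

With an identity rotation and a zero translation the output reproduces the input image wherever depth is available, which is a convenient sanity check for the sign convention of R and t.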

2.2 Geometric camera calibration

The intrinsic and extrinsic calibrations were performed using the coded target presented and discussed in Schramm et al. (2021a). This target improves the boundary detection, reduces the uncertainty of the geometric calibration, and still meets the requirement of being observable in the considered spectral range (LWIR, NWIR and VIS) with a sufficient contrast-to-noise ratio, as is explained in Schramm et al. (2021b).

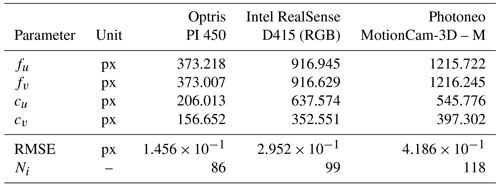

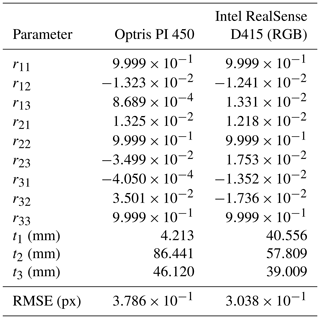

The results for the intrinsic calibration are presented in Table 2; they were obtained by considering a different number of images Ni for each camera. The root-mean-squared error (RMSE) of the Euclidean distance between the detected point position q and the reprojected point q′ was calculated to evaluate the quality of the calibration:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{N_f} \sum_{j=1}^{N_f} \lVert q_j - q'_j \rVert^2}, \tag{4}
$$

where Nf is the number of features to be identified. For the three cameras, RMSE values below 1 pixel were obtained. Compared with the values reported in Schramm et al. (2021b) for the uncoded chessboard target, reductions of about 45 % and 33 % were achieved for the color camera and the infrared thermal-imaging camera, respectively.
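
The reprojection error described above can be computed with a few lines of Python. The sketch assumes that the detected and reprojected feature positions are given as two equally long lists of `(u, v)` pixel coordinates; the function name is hypothetical and the numbers in the example are made up.

```python
import math


def reprojection_rmse(detected, reprojected):
    """Root-mean-squared Euclidean distance between detected and reprojected points (px)."""
    if not detected or len(detected) != len(reprojected):
        raise ValueError("both lists must be non-empty and of equal length")
    squared = sum(
        (u - up) ** 2 + (v - vp) ** 2
        for (u, v), (up, vp) in zip(detected, reprojected)
    )
    return math.sqrt(squared / len(detected))


# Two features, each displaced by 0.3 px in u and 0.4 px in v (0.5 px in total).
rmse = reprojection_rmse([(10.0, 20.0), (30.0, 40.0)], [(10.3, 20.4), (30.3, 40.4)])
```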

The results for the extrinsic calibration are presented in Table 3. The coordinate system of the MotionCam-3D was chosen to hold the global coordinate system of the 3D thermography system. This implies that the frames recorded by the color camera and the thermal-imaging camera are reprojected to this system by Eq. (2). As with the intrinsic calibration, the RMSE was reduced, by about 10 % for the thermal-imaging camera with respect to the RMSE reported in Schramm et al. (2020). No comparisons are presented for the RGB camera since it was not extrinsically calibrated in Schramm et al. (2020).

Table 3. Results of the extrinsic calibration. The values are taken from each individual camera coordinate system to the coordinate system of the MotionCam-3D.

By visual inspection, it was possible to confirm that the values found for the rotation matrices and translation vectors describe the relative locations between the coordinate systems csu and csi.

3 Experimental evaluation

An experimental study was carried out in order to evaluate and compare the models obtained with each system. For this purpose, a geometrical test object was inspected six times and eight times with the existing and new systems, respectively. The obtained models were analyzed qualitatively and quantitatively in order to evaluate the geometrical fidelity and the quality of the point clouds. The results presented below only correspond to one model obtained with each system, since a similar behavior is obtained from the other models.

3.1 Test object

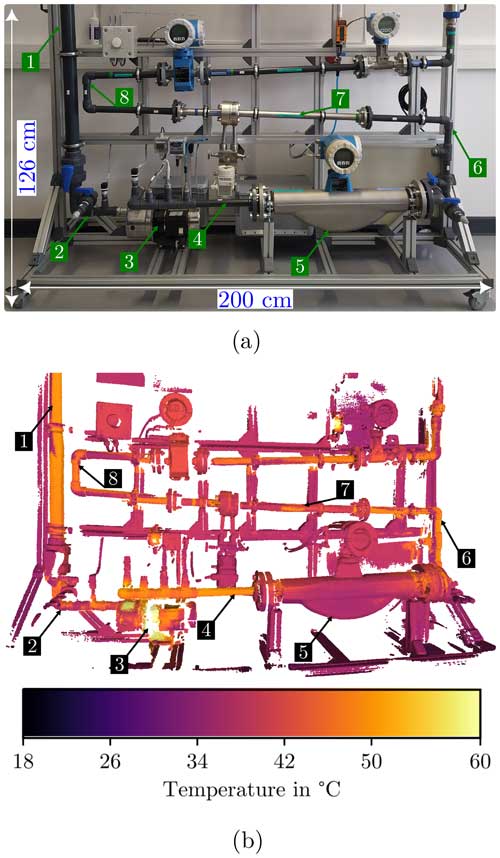

The flow measurement test bench presented in Fig. 4 was selected to be the object under study. It was chosen because, on the one hand, its design enables the study of the reproduction of both flat and curved regions of varying sizes, arranged at different distances in a non-predefined manner. On the other hand, the components of this test bench, such as pumps and instruments, which are heated by their operation, are elements found in typical industrial assemblies. Likewise, these elements and their joints, such as PVC pipes and metal flanges, are made of different materials (some of them with paint coating) whose emissivities ϵ differ notably, which causes a visual contrast in the thermograms delivered by the infrared camera. Additionally, the thermal contrast was increased for the experimental study by pumping hot water through the test bench.

Figure 4b presents a typical 3D thermogram obtained for the test object with the new system. In the 3D thermogram, one of the hottest sections of the pipeline is observed in the upper-left region (label 1 in Fig. 4a), which corresponds to the water intake point of the system. The temperature decreases slightly downstream (label 2) in the pipe leading to the pump and increases again after passing through this device (label 4). Correct temperature readings were not expected from the measurements on the metal parts, such as the body of the mass meter (label 5) or the shiny metal pipe above it (label 7), since the emissivities of these surfaces (ϵ<0.3) differ from the one set for the experimental study (ϵ=1). However, the green sticker on the metal pipe (where the arrow of label 7 points) has a similar emissivity value (ϵ>0.85). It can therefore be concluded that these readings do correspond to the surface temperature of the pipe at this point. It is also important to note that the heating of the pump motor (label 3) can also be observed in the 3D thermogram.

3.2 Qualitative assessment

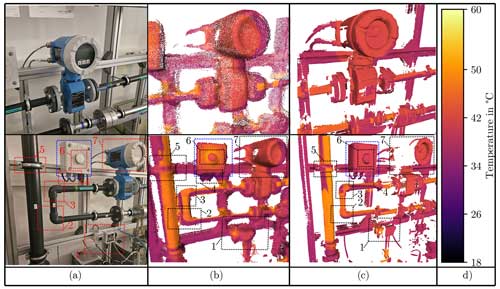

Figure 5 presents a comparison of the obtained point clouds for some regions of the test object. A visual improvement of the quality of the geometry is observed with the MotionCam-3D. In the upper row it can be noted that the new system provides straighter lines and flatter surfaces, as can be seen in the regions corresponding to the frame of the test object. Moreover, since the MotionCam-3D retrieves more points, the resolution of the model is consequently increased, enabling a better identification of the details of the object.

Typical differences between the point clouds obtained with both systems are detailed in the bottom row of Fig. 5. For the existing system, the edges of some objects are not clearly distinguishable, and the associated components can be misinterpreted. This has two main repercussions. On the one hand, free regions between nearby bodies are filled with scattered points that create artificial surfaces. This can be observed when two components are merged, as in rectangle 1 with the two contiguous sensors, rectangle 2 with the parallel vertical tubes and rectangle 4 with the cables coming out of the regulator. On the other hand, the section changes are not perceived, making the contours of the objects look continuous. This is the case for rectangles 2 and 3 with the end sections of the fittings, the clamp in rectangle 5 and the knob on the regulator in rectangle 6. The fidelity of the surface alignment is addressed with the blue rectangles. The model produced by the existing system (column b) fails to retrieve a correct face orientation between the front of the regulator (where the knob is placed) and the side face. As can be observed in column c, these artifacts no longer appear for the new system, and the representation of the measurement object is more consistent with its actual geometry (column a).

Nevertheless, it is common to observe bodies with incomplete regions in the models produced by the new system (see rectangle 7). This is because the measurement volume of the MotionCam-3D covers a narrower distance range than that of the Intel RealSense camera. Although fewer points are integrated into the model with the existing system, more regions can be covered with this sensor.

3.3 Quantitative assessment

The quality of point clouds can be measured through subjective and objective methods according to Dumic et al. (2018). The subjective methods include the well-known international standard ITU-R BT.500-13 (International Telecommunication Union/ITU-R Radiocommunication Sector of ITU, 2012), which defines a set of protocols to collect a mean opinion score, and methods based on user interaction with the raw point clouds (Zhang et al., 2014; Alexiou and Ebrahimi, 2017).

The objective methods can be assigned to two groups. The first are recognized as full-reference methods which measure the point cloud quality using a reference model (e.g., Mekuria and Cesar, 2016; Tian et al., 2017). These are mostly used for assessing downsampled or compressed point clouds. The second are known as non-reference methods and establish quality metrics based on local geometry information (Mallet et al., 2011; Zhang et al., 2022).

In this case study, the subjective methods are not of interest, since they do not aim to measure the usability of the texturized information in the 3D thermograms, which in this case are the temperature values represented by RGB values. The full-reference methods were also not an option because it is not possible to define a ground truth that permits a fair comparison for both systems. The non-reference methods avoid both limitations and fit the study requirements, which are the establishment of indicators to evaluate the equivalence of the point clouds with the geometry of the recorded objects and the use of the 3D thermograms for further applications.

Most of the non-reference approaches are based on the analysis of the local surface variation. From a point cloud P, a neighborhood PNbi of each point pi is obtained by employing the k-nearest-neighbor (k-NN) algorithm, using the Euclidean distance metric. For the following analysis, the size of P consists of and points for the existing and new systems. The key goal is to measure how close the member points are to the centroid of PNbi and to establish whether the shape of the local neighborhood conforms to the actual shape of the real object in the respective zone.
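
The construction of a neighborhood can be sketched with a brute-force k-NN search in Python. For point clouds of realistic size a spatial index (for example a k-d tree) would be used instead; the brute-force version is chosen here only for clarity. Whether the query point itself belongs to its neighborhood is not stated in the text, so excluding it is an assumption, and the function names are hypothetical.

```python
import math


def k_nearest(points, index, k):
    """Indices of the k points closest to points[index] (Euclidean distance), excluding itself."""
    ref = points[index]
    others = [j for j in range(len(points)) if j != index]
    others.sort(key=lambda j: math.dist(points[j], ref))
    return others[:k]


def centroid(neighborhood):
    """Mean position of the neighborhood members."""
    n = len(neighborhood)
    return tuple(sum(p[a] for p in neighborhood) / n for a in range(3))


def mean_distance_to_centroid(neighborhood):
    """Average Euclidean distance of the members to the neighborhood centroid."""
    c = centroid(neighborhood)
    return sum(math.dist(p, c) for p in neighborhood) / len(neighborhood)


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
neighborhood = [cloud[j] for j in k_nearest(cloud, 0, 2)]
spread = mean_distance_to_centroid(neighborhood)
```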

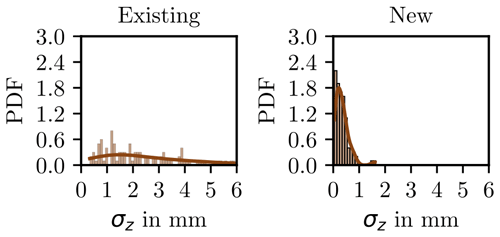

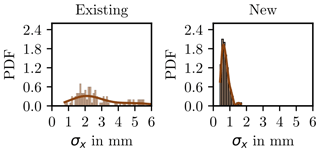

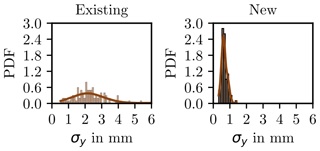

3.3.1 Dispersion of the points in the neighborhoods

One hundred points were randomly selected to measure the spatial distribution in their respective neighborhoods. The standard deviation along each coordinate axis was computed. This was not performed on the entire point cloud because it is computationally intensive. Figures 6 to 8 present the histograms for the standard deviations σx, σy, and σz using k=10 members for each neighborhood. In all three cases, the results for the new system show a narrower distribution, with an approximate range of 1 mm, whose expected value is below 1 mm. In the case of the existing system, the range of the distribution was from 4 to 6 mm, and the most probable value tends towards 2 mm. This suggests that the new system provides improved geometric accuracy due to the local agglomeration of points in narrower neighborhoods, which is consistent throughout the model.

Figure 6. Probability density function of σx for each neighborhood of 100 randomly selected points. A bin width of 0.1 mm was selected for generating the histogram. The neighborhoods consist of k=10 members.

Figure 7. Probability density function of σy for each neighborhood of 100 randomly selected points. A bin width of 0.1 mm was selected for generating the histogram. The neighborhoods consist of k=10 members.
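
The dispersion statistics behind these histograms can be reproduced with the following Python sketch. It takes a neighborhood as a list of `(x, y, z)` tuples and returns the standard deviation along each axis; the population form (division by the number of members) is an assumption, since the text does not state which estimator was used. The second function turns a list of values into a density histogram with a fixed bin width. Both function names are hypothetical.

```python
import math


def axis_std(neighborhood):
    """Standard deviation of the member coordinates along x, y and z (population form)."""
    n = len(neighborhood)
    means = [sum(p[a] for p in neighborhood) / n for a in range(3)]
    return tuple(
        math.sqrt(sum((p[a] - means[a]) ** 2 for p in neighborhood) / n)
        for a in range(3)
    )


def density_histogram(values, bin_width):
    """Return (bin_index, density) pairs; bin i covers [i * bin_width, (i + 1) * bin_width)."""
    counts = {}
    for value in values:
        b = math.floor(value / bin_width)
        counts[b] = counts.get(b, 0) + 1
    n = len(values)
    return [(b, c / (n * bin_width)) for b, c in sorted(counts.items())]


sigma = axis_std([(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)])
hist = density_histogram([0.05, 0.15, 0.17, 0.25], 0.1)
```

The densities are normalized so that the sum of density times bin width equals 1, which makes histograms of samples with different sizes directly comparable.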

3.3.2 Local roughness

Hoppe et al. (1992) proposed a method to assess the shape of the point neighborhoods. It consists of the computation of the empirical covariance matrix Ci:

$$
C_i = \frac{1}{k} \sum_{j=1}^{k} (p_j - \bar{p})(p_j - \bar{p})^{\mathsf{T}}, \tag{5}
$$

where pj and p̄ are Cartesian coordinate vectors and represent the jth member and the mean of the neighborhood PNbi, respectively. The eigenvector problem is solved for all points pi in P:

$$
C_i v_l = \lambda_l v_l, \quad l \in \{1, 2, 3\}, \tag{6}
$$

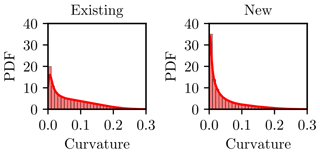

with the eigenvectors vl and the corresponding eigenvalues λl. The variation of pi along the direction of vl is represented by λl (Pauly et al., 2002), and thus the shape of the neighborhood can be evaluated. The curvature

$$
\mathrm{Cur} = \frac{\lambda_3}{\lambda_1 + \lambda_2 + \lambda_3}, \quad \lambda_1 \geq \lambda_2 \geq \lambda_3 \geq 0, \tag{7}
$$

proposed by Pauly et al. (2002), Rusu (2009) and Weinmann et al. (2013) provides information about the local roughness (Zhang et al., 2022) and was calculated for this study. The size of the neighborhoods was also fixed at 10 members (k=10), as was done by Zhang et al. (2022) and Hackel et al. (2016). This is fair for assessing both systems since the eigenvalues do not depend on the scale, rotation or translation (Jutzi and Gross, 2009).
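
A self-contained Python sketch of the curvature computation is given below. It interprets the curvature as the ratio of the smallest eigenvalue of the neighborhood covariance matrix to the sum of all three eigenvalues (the surface variation of Pauly et al., 2002), which is an assumption about the exact definition used in the study. The normalization of the covariance matrix by the number of members is also assumed; it cancels in the ratio. The eigenvalues are obtained with the trigonometric closed form for symmetric 3×3 matrices, so no external library is needed. All function names are hypothetical.

```python
import math


def covariance(neighborhood):
    """3x3 covariance matrix of the member coordinates (normalized by the member count)."""
    k = len(neighborhood)
    mean = [sum(p[a] for p in neighborhood) / k for a in range(3)]
    return [
        [sum((p[r] - mean[r]) * (p[c] - mean[c]) for p in neighborhood) / k for c in range(3)]
        for r in range(3)
    ]


def symmetric_eigenvalues(A):
    """Eigenvalues of a symmetric 3x3 matrix in descending order (trigonometric closed form)."""
    p1 = A[0][1] ** 2 + A[0][2] ** 2 + A[1][2] ** 2
    if p1 == 0.0:  # the matrix is already diagonal
        return sorted((A[0][0], A[1][1], A[2][2]), reverse=True)
    q = (A[0][0] + A[1][1] + A[2][2]) / 3.0
    p2 = sum((A[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1
    p = math.sqrt(p2 / 6.0)
    B = [[(A[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (
        B[0][0] * (B[1][1] * B[2][2] - B[1][2] * B[2][1])
        - B[0][1] * (B[1][0] * B[2][2] - B[1][2] * B[2][0])
        + B[0][2] * (B[1][0] * B[2][1] - B[1][1] * B[2][0])
    )
    r = max(-1.0, min(1.0, det_b / 2.0))  # clamp against rounding errors
    phi = math.acos(r) / 3.0
    lam1 = q + 2.0 * p * math.cos(phi)
    lam3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    lam2 = 3.0 * q - lam1 - lam3
    return [lam1, lam2, lam3]


def curvature(neighborhood):
    """Smallest eigenvalue divided by the eigenvalue sum; 0 for a perfectly planar neighborhood."""
    lam = symmetric_eigenvalues(covariance(neighborhood))
    total = sum(lam)
    return max(lam[2], 0.0) / total if total > 0.0 else 0.0
```

For points lying exactly on a plane the smallest eigenvalue vanishes and the curvature is 0, whereas an isotropic distribution of points yields the maximum value of 1/3.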

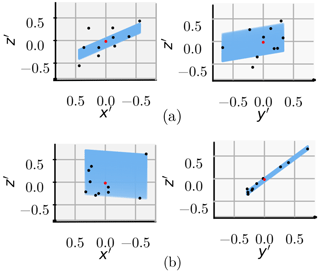

Figure 9 presents an example of a typical neighborhood obtained with both systems. The components of the coordinate vectors were redefined according to the following normalization:

where F corresponds to . The example of the new system shows that the points pi are more concentrated around the centroid and are closer to the regression plane. If a surface were reconstructed from these, it would be observed that its roughness is lower in this case. The calculation of the curvature Cur confirms this. The computation of the eigenvalues λl with Eq. (7) indicates a reduction of 3 orders of magnitude for the new system.

Figure 9. Example of a typical neighborhood from the point clouds obtained with the existing (a) and new (b) systems. The units of the axes were modified according to Eq. (8). The values of for PNbi in panels (a) and (b) are and , respectively. The blue plane was obtained from a linear regression fitting, and the red point corresponds to the centroid of the neighborhood.

This is consistent with all the point clouds delivered by both systems, whose results are presented in Fig. 10. The local curvature around the registered points was notably reduced with the implementation of the MotionCam-3D. The statistical distribution of this indicator shows a trend towards the ideal value (Cur=0) of this feature for the entire data set. Correspondingly, smoother surfaces could be obtained from these point clouds, which means that a better approximation to the real object was achieved.

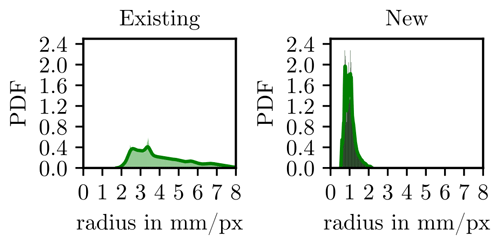

3.3.3 Sizes of the surface elements

In a strict sense, the models obtained by ElasticFusion are built from a surface discretization entity known as Surfel (Pfister et al., 2000). These are unordered point primitives without explicit connectivity, and for the extended system, Schramm et al. (2020) combine the following attributes: position, normal vector, weight, color and temperature values textured with RGB vectors, time stamps, and radius rs. Each Surfel is associated with a circular surface element whose radius rs is calculated as follows:

with d the depth measurement, fu and fv the focal length scale factors (see Sect. 2.1) and the z component of the estimated normalized normal vector. During the model creation, ElasticFusion determines the value of rs to minimize the holes between neighboring surface elements (Weise et al., 2009; Keller et al., 2013; Salas-Moreno et al., 2014). The statistical distributions are presented in Fig. 11. As for the other metrics, the surface element size of the model obtained with the new system also exhibits a narrower range and an expected value towards a smaller radius. This indicates that the resolution of the models has increased with the new system by more than 2 times, as was expressed before. It would be expected that using the 3D thermograms of the new system for calculating the surface heat dissipation of inspected bodies (see Schmoll et al., 2022) should deliver more accurate results due to the possibility of obtaining a mesh with smaller elements.
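
The attributes of a surface element listed above can be collected in a small data structure. The following Python sketch is only illustrative: the field names, the use of two separate time stamps and the helper `area` are assumptions and do not mirror the data layout of ElasticFusion or of the extended system. The radius is treated as a given value, since its computation is not part of this sketch.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Surfel:
    """Hypothetical container for the attributes of one circular surface element."""
    position: Tuple[float, float, float]
    normal: Tuple[float, float, float]
    weight: float
    color_rgb: Tuple[int, int, int]
    thermal_rgb: Tuple[int, int, int]   # temperature encoded as RGB
    init_time: int                      # assumed: time stamp of creation
    last_update_time: int               # assumed: time stamp of the last update
    radius: float                       # rs, in the same unit as the position

    def area(self) -> float:
        """Area of the circular surface element."""
        return math.pi * self.radius ** 2


coarse = Surfel((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), 1.0, (0, 120, 30), (255, 0, 0), 0, 5, 2.0e-3)
fine = Surfel((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), 1.0, (0, 120, 30), (255, 0, 0), 0, 5, 1.0e-3)
ratio = coarse.area() / fine.area()
```

Since the area scales with the square of the radius, halving the radius means that each element covers a quarter of the area, which is why a smaller radius translates into a finer surface discretization.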

4 Conclusions

This paper has presented the integration of a MotionCam-3D into a 3D thermography system. The new system was geometrically calibrated with a coded target, obtaining an overall improvement of the root-mean-squared reprojection error of the intrinsic and extrinsic calibrations, compared with the results obtained in Schramm et al. (2021b, 2020).

The performances of the existing and new systems were evaluated through a qualitative and quantitative assessment of the resulting point clouds. A flow measurement test bench was selected as the test object and modeled with both systems. On the one hand, curved and flat surfaces of different sizes can be modeled from this object. On the other hand, it includes equipment and instruments typically found in industrial installations. To enhance the thermal contrast, hot water was made to flow through the test bench. The presented 3D thermogram showed heating of operating components and sections of the test object with relevant temperature differences.

The qualitative inspection suggests a reduction of the number of scattered points around the surfaces and better identification of the details of the body, such as the section change in the tube fittings (see Fig. 5), fewer artifact surfaces and an increase in the spatial resolution, all as a consequence of the deployment of the MotionCam-3D. This has a significant impact on the interpretability of 3D thermograms. The ability to accurately locate atypical regions in the thermal distribution of objects, such as unexpected heat sources or sinks (e.g., hotspots), depends directly on the quality of the geometrical representation of the models.

The quantitative assessment was carried out with a non-reference method, calculating local statistics of point neighborhoods. A sample of 100 points was selected to estimate the spatial distribution of the point neighbors around the centroid of each neighborhood. The results showed that the new system yields models with lower standard deviations in the three directions. This means that the greater spatial resolution of the MotionCam-3D has the expected effect of increasing the geometric accuracy of the created model. The local surface roughness was also studied for all the point clouds, considering a principal component approach. The results also showed a decrease in the local surface variation, resulting in less dispersion throughout the entire geometry.

The Surfel radius of the 3D thermograms was also considered in the evaluation. The results showed that the size of these surface units has decreased by a factor of 2 to 3. This suggests that the point clouds obtained with the new system are more useful for post-applications, such as creating meshes for calculating the surface heat dissipation and computing the measured temperature values, as presented in Schmoll et al. (2022). This will be assessed in a future work.

For future work, the following improvements of the 3D thermography system will be addressed:

- Spatiotemporal calibration of the system: Vidas et al. (2013) presented a methodology to determine the timing offset between the clocks of the sensors. This will improve the assignment of the temperature values in the point cloud.

- Data fusion of depth sensors: similar to Costanzo et al. (2014), the dual deployment of the Intel RealSense camera and the MotionCam-3D can be considered for obtaining point clouds. The first sensor is expected to exploit its field of view and the second its geometric accuracy.

Finally, the system will be tested in industrial scenarios in order to evaluate its performance and the usability of its results.

The results presented in the paper were obtained with the code available in the following repository: https://doi.org/10.5281/zenodo.11355331 (Mendez, 2024). For the geometric calibration, the calibration toolbox presented in Schramm et al. (2021b) and the coded target presented in Schramm et al. (2021a) were utilized. Both can be downloaded from the following repositories: https://doi.org/10.5281/zenodo.11367429 (Schramm et al., 2024) and https://doi.org/10.5281/zenodo.4596313 (Schramm and Ebert, 2021).

The data used for the evaluation of the 3D thermography systems are available at https://doi.org/10.5281/zenodo.8391050 (Mendez and Schramm, 2023).

MM performed the implementation of the MotionCam-3D under the supervision of SS. MM analyzed the data and wrote the paper. RS, SS and AK revised the paper.

The contact author has declared that none of the authors has any competing interests.

Publisher’s note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This article is part of the special issue “Sensors and Measurement Science International SMSI 2023”. It is a result of the Sensor and Measurement Science International, Nuremberg, Germany, 8–11 May 2023, where a short paper (Mendez et al., 2023) was presented.

The authors are grateful to the anonymous reviewers for their feedback.

This paper was edited by Alexander Bergmann and reviewed by two anonymous referees.

Alexiou, E. and Ebrahimi, T.: On subjective and objective quality evaluation of point cloud geometry, in: 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX), 1–3, IEEE, https://doi.org/10.1109/QoMEX.2017.7965681, 2017.

Bin, Z., Jianxin, Q., Yunkang, S., Wentao, H., and Longqiu, L.: In situ monitoring of flip chip package process using thermal resistance network method and active thermography, Int. J. Heat Mass Tran., 225, 125402, https://doi.org/10.1016/j.ijheatmasstransfer.2024.125402, 2024.

Campione, I., Lucchi, F., Santopuoli, N., and Seccia, L.: 3D Thermal Imaging System with Decoupled Acquisition for Industrial and Cultural Heritage Applications, Appl. Sci., 10, 828, https://doi.org/10.3390/app10030828, 2020.

Costanzo, A., Minasi, M., Casula, G., Musacchio, M., and Buongiorno, M.: Combined Use of Terrestrial Laser Scanning and IR Thermography Applied to a Historical Building, Sensors, 15, 194–213, https://doi.org/10.3390/s150100194, 2014.

Dumic, E., Duarte, C. R., and da Silva Cruz, L. A.: Subjective evaluation and objective measures for point clouds – State of the art, in: 2018 First International Colloquium on Smart Grid Metrology (SmaGriMet), 1–5, IEEE, https://doi.org/10.23919/SMAGRIMET.2018.8369848, 2018.

Gardner, J., Stelter, C., Sauti, G., Kim, J.-W., Yashin, E., Wincheski, R., Schniepp, H., and Siochi, E.: Environment control in additive manufacturing of high-performance thermoplastics, The Int. J. Adv. Manufact. Technol., 119, 1–11, https://doi.org/10.1007/s00170-020-05538-w, 2022.

Greeshma, J., Iven, J., and Sujatha, S.: Breast cancer detection: A comparative review on passive and active thermography, Infrared Phys. Techn., 134, 104932, https://doi.org/10.1016/j.infrared.2023.104932, 2023.

Grubišić, I., Gjenero, L., Lipić, T., Sović, I., and Skala, T.: Active 3D scanning based 3D thermography system and medical applications, in: 2011 Proceedings of the 34th International Convention MIPRO, 269–273, IEEE, https://ieeexplore.ieee.org/abstract/document/5967063 (last access: 27 May 2024), 2011.

Hackel, T., Wegner, J. D., and Schindler, K.: Fast semantic segmentation of 3D point clouds with strongly varying density, ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci., III-3, 177–184, https://doi.org/10.5194/isprsannals-III-3-177-2016, 2016.

Ham, Y. and Golparvar-Fard, M.: An automated vision-based method for rapid 3D energy performance modeling of existing buildings using thermal and digital imagery, Adv. Eng. Inform., 27, 395–409, https://doi.org/10.1016/j.aei.2013.03.005, 2013.

Hellstein, P. and Szwedo, M.: 3D thermography in non-destructive testing of composite structures, Meas. Sci. Technol., 27, 124006, https://doi.org/10.1088/0957-0233/27/12/124006, 2016.

Hoppe, H., DeRose, T., Duchamp, T., McDonald, J., and Stuetzle, W.: Surface Reconstruction from Unorganized Points, SIGGRAPH Comput. Graph., 26, 71–78, https://doi.org/10.1145/142920.134011, 1992.

Intel-RealSense: Intel RealSense: Product Family D400 Series, https://www.intelrealsense.com/wp-content/uploads/2023/07/Intel-RealSense-D400-Series-Datasheet-July-2023.pdf (last access: 27 May 2024), 2023.

International Telecommunication Union/ITU-R Radiocommunication Sector of ITU: Methodology for the subjective assessment of the quality of television pictures, ITU-R BT.500-13, https://www.itu.int/dms_pubrec/itu-r/rec/bt/R-REC-BT.500-13-201201-I!!PDF-E.pdf (last access: 27 May 2024), 2012.

Jutzi, B. and Gross, H.: Nearest Neighbor classification on Laser point clouds to gain object structures from buildings. High-resolution earth imaging for geospatial information, in: International Archives of Photogrammetry and Remote Sensing, Vol. 38, Copernicus Publications, ISSN 1682-1777, 2009.

Keller, M., Lefloch, D., Lambers, M., Izadi, S., Weyrich, T., and Kolb, A.: Real-Time 3D Reconstruction in Dynamic Scenes Using Point-Based Fusion, in: 2013 International Conference on 3D Vision – 3DV 2013, 1–8, IEEE, https://doi.org/10.1109/3DV.2013.9, 2013.

Lagüela, S., Martínez, J., Armesto, J., and Arias, P.: Energy efficiency studies through 3D laser scanning and thermographic technologies, Energ. Buildings, 43, 1216–1221, https://doi.org/10.1016/j.enbuild.2010.12.031, 2011.

Mallet, C., Bretar, F., Roux, M., Soergel, U., and Heipke, C.: Relevance assessment of full-waveform lidar data for urban area classification, ISPRS J. Photogramm., 66, S71–S84, https://doi.org/10.1016/j.isprsjprs.2011.09.008, 2011.

Mekuria, R. and Cesar, P.: MP3DG-PCC, Open Source Software Framework for Implementation and Evaluation of Point Cloud Compression, in: Proceedings of the 24th ACM International Conference on Multimedia, MM '16, 1222–1226, Association for Computing Machinery, New York, NY, USA, ISBN 9781450336031, https://doi.org/10.1145/2964284.2973806, 2016.

Mendez, M.: MT-MRT/JSSS-3DTS-Processing_Code: First version, 3D-Thermograms, Zenodo [code], https://doi.org/10.5281/zenodo.11355331, 2024.

Mendez, M. and Schramm, S.: Datasets obtained from the 3DTS with the RealSense version (RS) and the MotionCam (MC3D), Zenodo, https://doi.org/10.5281/zenodo.8391050, 2023.

Mendez, M., Schramm, S., Schmoll, R., and Kroll, A.: Integration of a High-Precision 3D Sensor into a Thermography System, in: Sensor and Measurement Science International, AMA Association for Sensors and Measurement, https://doi.org/10.5162/SMSI2023/E5.2, 2023.

Ng, Y. and Du, R.: Acquisition of 3D surface temperature distribution of a car body, in: 2005 IEEE International Conference on Information Acquisition, 16–20, IEEE, ISBN 978-0-7803-9303-5, https://doi.org/10.1109/ICIA.2005.1635046, 2005.

Optris-GmbH: Technical details – Infrared camera optris PI 400i/PI 450i, https://www.optris.de/infrarotkamera-optris-pi400i-pi450i (last access: 27 May 2024), 2024.

Ordóñez Müller, A. and Kroll, A.: Generating High Fidelity 3-D Thermograms With a Handheld Real-Time Thermal Imaging System, IEEE Sens. J., 17, 774–783, https://doi.org/10.1109/JSEN.2016.2621166, 2017.

Pauly, M., Gross, M., and Kobbelt, L.: Efficient simplification of point-sampled surfaces, in: IEEE Visualization 2002, 163–170, IEEE, https://doi.org/10.1109/VISUAL.2002.1183771, 2002.

Pfister, H., Zwicker, M., Van-Baar, J., and Gross, M.: Surfels: surface elements as rendering primitives, in: SIGGRAPH00: The 27th International Conference on Computer Graphics and Interactive Techniques Conference, 335–342, ACM Press/Addison-Wesley Publishing Co., https://doi.org/10.1145/344779.344936, 2000.

Photoneo: MotionCam-3D M, https://www.photoneo.com/es/products/motioncam-3d-m/ (last access: 27 May 2024), 2024.

Prisacariu, V. A., Kähler, O., Golodetz, S., Sapienza, M., Cavallari, T., Torr, P. H. S., and Murray, D. W.: InfiniTAM v3: A Framework for Large-Scale 3D Reconstruction with Loop Closure, CoRR, abs/1708.00783, http://arxiv.org/abs/1708.00783 (last access: 27 May 2024), 2017.

Rangel, J. and Soldan, S.: 3D Thermal Imaging: Fusion of Thermography and Depth Cameras, in: Proceedings of the 2014 International Conference on Quantitative InfraRed Thermography, QIRT Council, https://doi.org/10.21611/qirt.2014.035, 2014.

Rusu, R. B.: Semantic 3D object maps for everyday manipulation in human living environments, Ph.D. thesis, Technical University Munich, Germany, https://d-nb.info/997527676/34 (last access: 27 May 2024), 2009.

Salas-Moreno, R. F., Glocker, B., Kelly, P. H. J., and Davison, A. J.: Dense planar SLAM, in: 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 157–164, IEEE, https://doi.org/10.1109/ISMAR.2014.6948422, 2014.

Schmoll, R., Schramm, S., Breitenstein, T., and Kroll, A.: Method and experimental investigation of surface heat dissipation measurement using 3D thermography, J. Sens. Sens. Syst., 11, 41–49, https://doi.org/10.5194/jsss-11-41-2022, 2022.

Schramm, S. and Ebert, J.: MT-MRT/MRT-Coded-Calibration-Target: First release, v1.0.0, Zenodo [code], https://doi.org/10.5281/zenodo.4596313, 2021.

Schramm, S., Osterhold, P., Schmoll, R., and Kroll, A.: Generation of Large-Scale 3D Thermograms in Real-Time Using Depth and Infrared Cameras, in: Proceedings of the 2020 International Conference on Quantitative InfraRed Thermography, QIRT Council, https://doi.org/10.21611/qirt.2020.008, 2020.

Schramm, S., Ebert, J., Rangel, J., Schmoll, R., and Kroll, A.: Iterative feature detection of a coded checkerboard target for the geometric calibration of infrared cameras, J. Sens. Sens. Syst., 10, 207–218, https://doi.org/10.5194/jsss-10-207-2021, 2021a.

Schramm, S., Rangel, J., Aguirre, D., Schmoll, R., and Kroll, A.: Target Analysis for the Multispectral Geometric Calibration of Cameras in Visual and Infrared Spectral Range, IEEE Sens. J., 21, 2159–2168, https://doi.org/10.1109/JSEN.2020.3019959, 2021b.

Schramm, S., Rangel, J., Aguirre, D., and Schmoll, R.: MT-MRT/MRT-Camera-Calibration-Toolbox: v1.1.1, v1.1.1, Zenodo [code], https://doi.org/10.5281/zenodo.11367429, 2024.

Sels, S., Verspeek, S., Ribbens, B., Bogaerts, B., Vanlanduit, S., Penne, R., and Steenackers, G.: A CAD matching method for 3D thermography of complex objects, Infrared Phys. Techn., 99, 152–157, https://doi.org/10.1016/j.infrared.2019.04.014, 2019.

Tian, D., Ochimizu, H., Feng, C., Cohen, R., and Vetro, A.: Geometric distortion metrics for point cloud compression, in: 2017 IEEE International Conference on Image Processing (ICIP), 3460–3464, IEEE, https://doi.org/10.1109/ICIP.2017.8296925, 2017.

Vidas, S. and Moghadam, P.: HeatWave: A handheld 3D thermography system for energy auditing, Energ. Buildings, 66, 445–460, https://doi.org/10.1016/j.enbuild.2013.07.030, 2013.

Vidas, S., Moghadam, P., and Bosse, M.: 3D thermal mapping of building interiors using an RGB-D and thermal camera, in: 2013 IEEE International Conference on Robotics and Automation, 2311–2318, IEEE, ISBN 978-1-4673-5643-5 978-1-4673-5641-1, https://doi.org/10.1109/ICRA.2013.6630890, 2013.

Vidas, S., Moghadam, P., and Sridharan, S.: Real-Time Mobile 3D Temperature Mapping, IEEE Sens. J., 15, 1145–1152, https://doi.org/10.1109/JSEN.2014.2360709, 2015.

Wang, C., Cho, Y. K., and Gai, M.: As-Is 3D Thermal Modeling for Existing Building Envelopes Using a Hybrid LIDAR System, J. Comput. Civil Eng., 27, 645–656, https://doi.org/10.1061/(ASCE)CP.1943-5487.0000273, 2013.

Weinmann, M., Jutzi, B., and Mallet, C.: Feature relevance assessment for the semantic interpretation of 3D point cloud data, ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci., II-5/W2, 313–318, https://doi.org/10.5194/isprsannals-II-5-W2-313-2013, 2013.

Weise, T., Wismer, T., Leibe, B., and Van Gool, L.: In-hand scanning with online loop closure, in: 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, 1630–1637, IEEE, https://doi.org/10.1109/ICCVW.2009.5457479, 2009.

Whelan, T., Kaess, M., Johannsson, H., Fallon, M., Leonard, J. J., and McDonald, J.: Real-time large-scale dense RGB-D SLAM with volumetric fusion, The Int. J. Robot. Res., 34, 598–626, https://doi.org/10.1177/0278364914551008, 2015a.

Whelan, T., Leutenegger, S., Salas Moreno, R., Glocker, B., and Davison, A.: ElasticFusion: Dense SLAM Without A Pose Graph, in: Robotics: Science and Systems XI, Robotics: Science and Systems Foundation, https://doi.org/10.15607/RSS.2015.XI.001, 2015b.

Zhang, J., Huang, W., Zhu, X., and Hwang, J.-N.: A subjective quality evaluation for 3D point cloud models, in: 2014 International Conference on Audio, Language and Image Processing, 827–831, IEEE, https://doi.org/10.1109/ICALIP.2014.7009910, 2014.

Zhang, Z., Sun, W., Min, X., Wang, T., Lu, W., and Zhai, G.: No-Reference Quality Assessment for 3D Colored Point Cloud and Mesh Models, IEEE Trans. Circuits Syst. Video Technol., 32, 7618–7631, https://doi.org/10.1109/TCSVT.2022.3186894, 2022.